Prologue

This post is my take on reviving an old project (the last commit was 3 years ago) that was born around 2007/2008 at Nokia Research Center and written in Erlang. What excited me is that the Disco project can run Python MapReduce jobs against an Erlang core. How awesome is that! Erlang is practically a synonym for parallel processing and high availability.

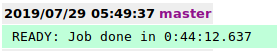

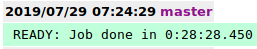

I successfully built it and ran a dataset of 250M records (10GB+ in size) through a Python MapReduce job that finished in 28 minutes (improved from 44 minutes) on a cluster of 3 EC2 free-tier t2.micro instances. Imagine not using a MapReduce approach: this could take days to process. The data pre-processing was done in C++17, as it ran much faster than its Python counterpart (benchmarking is for a later post). The screenshots above compare the two runs.

It felt like wreck diving, just phenomenal. I also started learning Erlang, and I will be documenting my attempts to make the Disco Project rise again. Interested? Get in touch! 😄
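To put the run quoted above into perspective, here is a small back-of-the-envelope calculation. It only uses the figures from this post (250M records, 10GB+, 3 nodes, 44 and then 28 minutes). The 10 GB size is a lower bound, so the MB/s figures are rough estimates rather than measurements.

```python
# Figures quoted in this post; SIZE_GB is a lower bound ("10GB+").
RECORDS = 250_000_000
SIZE_GB = 10
NODES = 3


def summarize(minutes):
    """Return rough throughput figures for a job that ran for `minutes`."""
    seconds = minutes * 60
    return {
        "records_per_sec": RECORDS / seconds,
        "mb_per_sec": SIZE_GB * 1000 / seconds,
        "records_per_sec_per_node": RECORDS / seconds / NODES,
    }


before = summarize(44)  # first run
after = summarize(28)   # improved run
speedup = 44 / 28

print(f"speedup: {speedup:.2f}x")
print(f"records/s before: {before['records_per_sec']:,.0f}")
print(f"records/s after:  {after['records_per_sec']:,.0f}")
print(f"MB/s after (at least): {after['mb_per_sec']:.1f}")
```

That is roughly a 1.57x improvement between the two runs, on three very small instances.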

A Little History

The Disco Project was initiated by Ville Tuulos (Erlang Hacker at Nokia Research) who started building Disco in 2007.

Disco is an Erlang / Python implementation of the Map/Reduce framework for distributed computing. Disco was used by Nokia and others for quick prototyping of data-intensive software, using hundreds of gigabytes of real-world data.
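If the Map/Reduce model is new to you, the idea can be sketched in a few lines of plain Python. This is not Disco's API and nothing here is distributed. The function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) are made up for illustration. In a real framework the map and reduce calls run on different worker nodes and the framework takes care of the shuffle step.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.split():
        yield word.lower(), 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: collapse all values of one key into a single result."""
    return key, sum(values)


def run_job(lines):
    """Run map, shuffle and reduce sequentially in a single process."""
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


counts = run_job(["disco runs python jobs", "erlang runs disco"])
print(counts)
```

Because each map call only looks at its own line and each reduce call only looks at its own key, both phases can be spread across many machines, which is what makes the model attractive for large datasets.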

But there is no active development any more. What happened? I have no idea. Some users suggested upgrading to the latest Erlang version, and there are some PRs for Python 3 upgrades, but as far as I know no one is actively working on it.

The Disco Project

Installing Disco

Prerequisites

I faced all kinds of issues while installing Disco on an Ubuntu EC2 instance on AWS. The latest Erlang version didn’t work out of the box. These are the prerequisites stated in the Disco project documentation:

- SSH daemon and client

- Erlang/OTP R14A or newer

- Python 2.6.6 or newer, or Python 3.2 or newer

Come on, Erlang/OTP R14A? The latest release at the time of this writing is Erlang/OTP 22, which the Erlang website lists as a major release.

I did some research and was able to install a Debian package for Erlang/OTP R16B02 on an Ubuntu 18.04 system using the following commands.

First, install some dependencies for Disco, including Python, and make the default Python binary point to Python 2 (I couldn’t get the Disco project from GitHub to work with Python 3; I might try to upgrade it later):

| |

Building Disco

Download the Erlang Debian package and install Erlang/OTP R16B02:

| |

Now let’s clone the Disco Project from GitHub and start building it:

| |

Note: If you decide you want to use DiscoDB (more on that later), then you can install it using these commands:

| |

Or, if the above doesn’t work, try building it using Python instead:

| |

Now you should have working Disco Project binaries on your system.

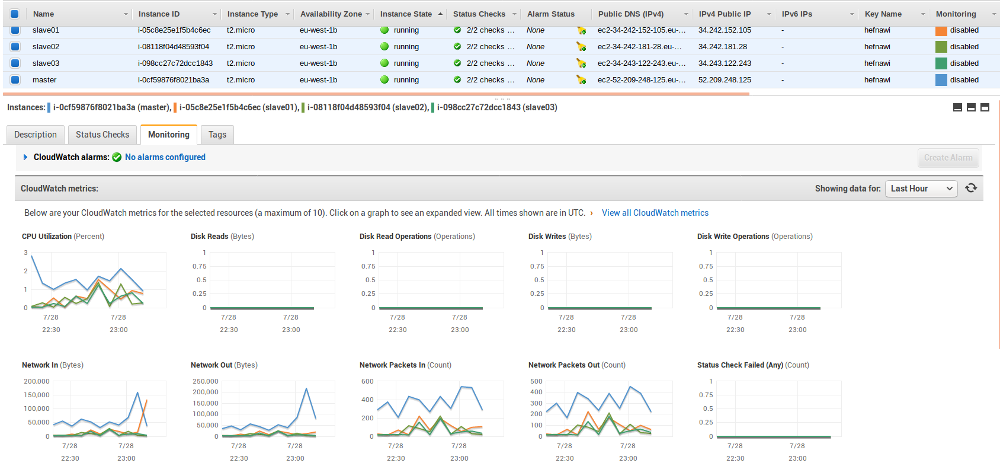

Of course, Disco must be installed on all nodes in the cluster. In my case, I created an AMI from the master node on Amazon EC2 and then launched the slave nodes from that same image, so that I did not have to repeat the installation process.

Set Up the Cluster

I will be using AWS EC2 instances for the Disco cluster, with one master node and 3 worker nodes. Of course, you can use virtual machines or any other method of deploying the cluster to your liking.

Before starting the Disco daemon we should first create ssh key pairs and duplicate the .erlang.cookie to all nodes on the cluster.

| |

Now run these steps on the master node:

| |

Change the hostname for the current node (I assume you are currently on the master node):

| |

To make that change permanent, open the /etc/hostname file:

| |

Now change that file’s content to:

| |

You might need to log out and then back into the ssh session to see that change in action.

Open the /etc/hosts file to declare a hostname for each of the nodes in the cluster:

| |

Add the following lines depending on your private IPs for each of the nodes:

| |
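Since the exact entries depend on your own private IPs, here is a minimal sketch that prints `/etc/hosts` lines for a cluster shaped like mine (one master, three slaves). The hostnames and the `10.0.0.x` addresses are placeholders I made up for illustration. Replace them with the private IPs shown in your EC2 console.

```python
# Hypothetical hostnames and private IPs; substitute your own values.
CLUSTER = {
    "master": "10.0.0.10",
    "slave1": "10.0.0.11",
    "slave2": "10.0.0.12",
    "slave3": "10.0.0.13",
}


def hosts_lines(nodes):
    """Return one '<ip>  <hostname>' line per node, with IPs aligned."""
    width = max(len(ip) for ip in nodes.values())
    return [f"{ip.ljust(width)}  {name}" for name, ip in nodes.items()]


lines = hosts_lines(CLUSTER)
print("\n".join(lines))
```

The same block of lines goes into `/etc/hosts` on every node, master included, so that each node can resolve all the others by hostname.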

Propagate configuration files

Next, you should duplicate all configuration files across all the slave nodes in the cluster. This could easily be done using a tool like Ansible, but here I will explain how to do it manually, which you can then translate to your favourite automation tool.

- Copy the public ssh key to all the slave nodes:

| |

- Copy the Erlang cookie to all the slave nodes, the following command can be run from the master node:

| |

- Update the hostname as well as the /etc/hosts file on each of the slave nodes. Basically, repeat the following for each slave node in your cluster:

Change the hostname for each of the slave nodes, for example:

| |

Then make that change permanent, by opening the /etc/hostname file:

| |

Now change that file’s content to:

| |

Now open the /etc/hosts file to declare a hostname for each of the other nodes in the cluster. This is identical for all nodes, including the master node:

| |

Add the following lines depending on your private IPs for each of the nodes:

| |

Web UI

The most secure way to view the Web UI in your browser (without exposing any ports on the actual cluster) is to create an SSH tunnel and access it through the mapped port on your localhost using this command:

| |

You can now simply view the Web UI by pointing your browser to http://localhost:8989.
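For reference, a tunnel like this is a plain `ssh -L` local port forward. Below is a minimal sketch that assembles such a command. The local port 8989 comes from the URL above. Everything else is an assumption on my side: the `ubuntu` user, the `master.example.com` host name, and the Web UI listening on port 8989 on the master.

```python
import shlex


def tunnel_command(user, master_host, local_port=8989, remote_port=8989):
    """Build an ssh local-forward command as an argument list.

    -N tells ssh not to run a remote command (forwarding only);
    -L maps local_port on this machine to remote_port on the master.
    """
    return [
        "ssh",
        "-N",
        "-L",
        f"{local_port}:localhost:{remote_port}",
        f"{user}@{master_host}",
    ]


# Hypothetical user and host, for illustration only.
cmd = tunnel_command("ubuntu", "master.example.com")
print(shlex.join(cmd))
```

Keeping the command as a list means it can be handed to `subprocess.run(cmd)` directly, without any shell quoting concerns.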

Next, we shall add all the slave nodes under the system configuration page by clicking on the configure link on the right sidebar:

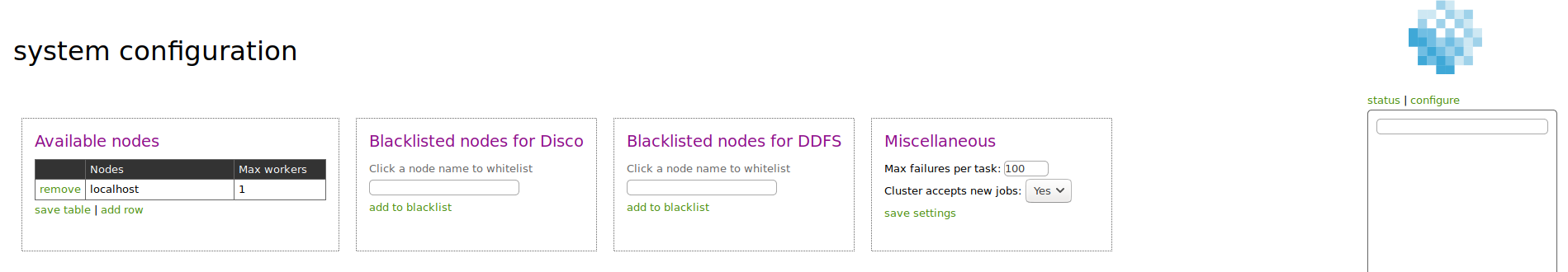

The initial configuration looks like this:

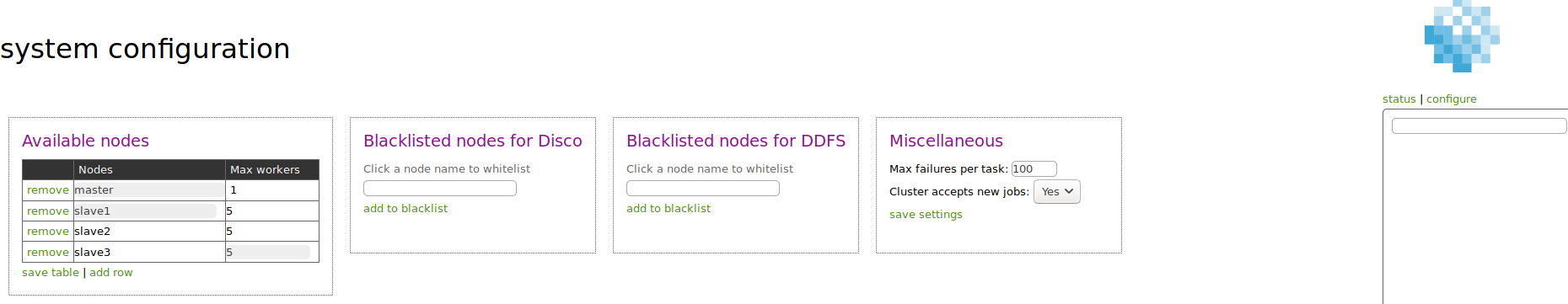

Once we add all the slave nodes (I am just using their hostnames, inserting each row with the add row button and finally clicking save table), it should look like this:

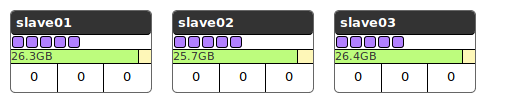

After adding all the slave nodes, you should see something similar to this on the status page (showing slaves only). I was using different hostnames for this cluster, i.e. slave01 versus slave1, so don’t worry about that difference:

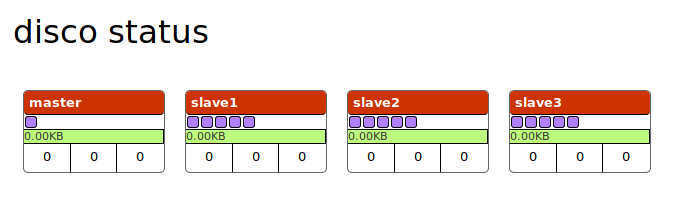

If something is wrong with the cluster setup for the Disco Project, the status page shows the affected nodes with red headers.

Next in Part 2

In Part 2 of this article, I will explain how to use the Disco Distributed Filesystem (DDFS) as a distributed storage layer, and I will also walk through a basic Python job that runs a MapReduce on big data. I hope you enjoy that one as well.

TODO a.k.a Now What?

- Benchmark the performance against other distributed computing frameworks. Some ideas:

- Apache Spark

- Apache Storm

- Ceph

- Google Big Query

- Pachyderm

- HPC

- Presto

- Create an Ansible playbook for installing Disco on a cluster

- Create a Packer AMI image for use with AWS projects
